24 Classification

In this part of the book we discuss classification methods, that is, methods to predict a qualitative or categorical response:

Figure 24.1: Clustering Corner

Some of the methods that we discuss in the next chapters are:

- Logistic Regression

- Canonical Discriminant Analysis

- Linear Discriminant Analysis

- Quadratic Discriminant Analysis

- Bayes’ Classifier

24.1 Introduction

The goal in classification is to take an input vector \(\mathbf{x}\) and to assign it to one of \(K\) discrete classes or groups \(\mathcal{C_k}\) where \(k = 1, \dots, K\). In the most common case, the classes are taken to be disjoint, so that each input is assigned to one and only one class.

24.1.1 Credit Score Example

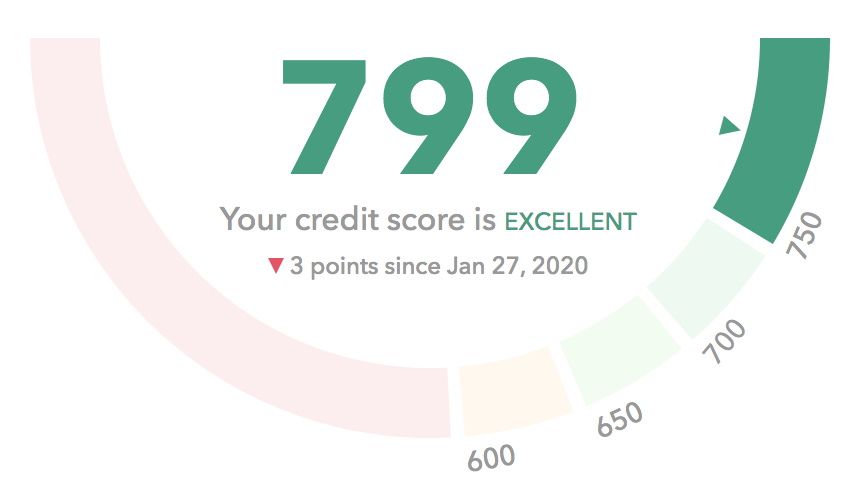

Your credit score is a three-digit number that relates to how likely you are to repay debt. This score typically ranges from 300-850. Banks and lenders use it to decide whether they’ll approve you for a credit card or loan.

Figure 24.2: Typical display of a Credit Score with a gauge chart

It’s not uncommon to have multiple different scores at the same time. For example, an auto lender might use one scoring model, while a mortgage lender uses another. Your scores are typically based on things like

On-time payments: This is your track record for paying bills on time. It has the most impact on your score.

Credit Usage: This is how much of your credit card limits you’re using.

Credit Type: The types of credit you have (credit cards, auto loans, student loans, mortgages, etc.)

Average Age of Credit: This is the average age of all your open credit cards and loans.

Total Accounts: The more credit cards and loans you’ve had, the more lenders trust you.

Credit Inquiries: A “hard” inquiry is when a lender checks your credit report.

Derogatory Marks: You’ll get a ding on your report if one or more of your accounts goes into collections or bankruptcy.

Credit Scores, at least those calculated in the US, claim that they never factor in personal information like your race, gender, religion, marital status or national origin.

24.1.2 Toy Example

Let’s consider a credit application from which \(p\) predictors are derived \(X = [ X_1, \dots, X_p ]\).

- Age

- Job type (and job seniority)

- Residential Status

- Marital Status

- Loan purpose

- etc

And suppose also that customers are divided in two classes: good and bad.

- Good customers are those that payed their loan back

- Bad customers are those that defaulted on their loan

Let’s bring our mental map back of the Supervised Learning diagram, and see how its elements get framed from a classification perspective.

Figure 24.3: Some elements of the Supervised Learning Diagram

From the previous diagram, we have three unknown distributions \(P()\):

- joint distribution of data: \(P(\mathbf{x}, y)\)

- conditional distribution of target, given inputs: \(P(y \mid \mathbf{x})\)

- marginal distribution of inputs: \(P(\mathbf{x})\)

The idea in classification problems is the following. Given a customer’s attributes \(X = \mathbf{x}\), to what class \(y\) we should assign this customer? Ideally (although not mandatory), we would like to know what is the conditional probability:

\[ P(y \mid X = \mathbf{x}) \tag{24.1} \]

For example, we can think of \(n\) individuals in a \(p\)-dimensional space. Moreover, assume that each class of customers forms its own cloud: the good customers, and the bad customers.

Figure 24.4: Cloud of n points in p-dimensional space

Now, assume that there are four individuals \(\{a, b, c, d\}\) for which we want to predict their classes.

Figure 24.5: To which class we assign new individuals?

It would be nice to have a mechanism or rule to classifiy observations. For example, we could end up with the following classifications. Customer \(a\) could be assigned to class bad. Customer \(d\) could also be assigned to class bad with high confidence. In turn, customer \(c\) coud be assigned with high confidence to class good. What about customer \(b\)? We could be uncertain to which class this individual belongs.

Figure 24.6: Some possible classifications

Having a classification rule allows us to divide the input space into regions \(\mathcal{R}_k\) called decision regions (one for each class). The boundaries between decision regions would establish decision boundaries or decision surfaces.

Figure 24.7: Two possible decision boundaries

For example, one decision rule could result in a linear decision boundary, while another decision rule could result in a nonlinear decision surface.

24.1.3 Two-class Problem

In our credit application example we have customers belonging to one of two classes: \(\mathcal{C}_1 =\) good, and \(\mathcal{C}_2 =\) bad.

Figure 24.8: Cloud of n customers in p-dimensional space

Since this is a supervised learning problem, we start with some available evidence (i.e. training data set). A first step may involve studying how \(X\) values vary according to a given class \(\mathcal{C_k}\). In other words, we may start exploring the class-conditional distribution of

\[ P(X = \mathbf{x} \mid y = k) \tag{24.2} \]

For instance, we may ask “How does \(X_j \mid y = 1\) compare with \(X_j \mid y = 2\)?” Similarly, “How does \(X_q \mid y = 1\) compare with \(X_q \mid y = 2\)?”

Figure 24.9: Exploring conditional distributions

Typically we have descriptive information about \(X \mid y = k\). We can calculate summary statistics, and compare visual displays of these distributions. If we found a way to get the class-conditional distribution \(P(X \mid y = k)\), we could compute:

\[ P(X = \mathbf{x} \mid \text{Good}) = \frac{P(\text{applicant is Good and has attributes } \mathbf{x})}{P(\text{applicant is Good})} \tag{24.3} \]

and

\[ P(X =\mathbf{x} \mid \text{Bad}) = \frac{P(\text{applicant is Bad and has attributes } \mathbf{x})}{P(\text{applicant is Bad})} \tag{24.4} \]

However, the probability that we are interested in at the end of the day is the conditional probability \(P(y = k \mid X = \mathbf{x})\). This is the unknown target distribution in the supervised learning diargam.

\[ P(\text{Good} \mid X = \mathbf{x}) = \frac{P(\text{applicant has attributes } \mathbf{x} \text{ and is Good})}{P(\text{applicant has attributes } \mathbf{x})} \tag{24.5} \]

similarly

\[ P(\text{Bad} \mid X = \mathbf{x}) = \frac{P(\text{applicant has attributes } \mathbf{x} \text{ and is Bad})}{P(\text{applicant has attributes } \mathbf{x})} \tag{24.6} \]

In order to connect all the previous probabilities, we have to review the Bayes’ rule.

24.1.4 Bayes’ Rule Reminder

Let’s look at both types of conditional probabilities:

\[ P(X = \mathbf{x} \mid y = k) = \frac{P(y = k , X = \mathbf{x})}{P(y = k)} \tag{24.7} \]

and

\[ P(y = k \mid X = \mathbf{x}) = \frac{P(X = \mathbf{x} , y = k)}{P(X = \mathbf{x})} \tag{24.8} \]

And consider the joint distribution

\[\begin{align*} P(X = \mathbf{x} , y = k) &= P(y = k \mid X = \mathbf{x}) P(X = \mathbf{x}) \\ &= P(X = \mathbf{x} \mid y = k) P(y = k) \tag{24.9} \end{align*}\]

Solving for the joint distribution \(P(X = \mathbf{x} , y = k)\) we have that:

\[ P(X = \mathbf{x} \mid y = k) P(y = k) \hspace{1mm} = \hspace{1mm} P(y = k \mid X = \mathbf{x}) P(X = \mathbf{x}) \tag{24.10} \]

Thus:

\[ P(y = k \mid X = \mathbf{x}) = \frac{P(X = \mathbf{x} \mid y = k) P(y = k)}{P(X = \mathbf{x})} \tag{24.11} \]

where the marginal probability \(P(X = \mathbf{x})\) is calculated with the total probability formula:

\[ P(X = \mathbf{x}) = \sum_{k} P(X = \mathbf{x} \mid y = k) P(y = k) \tag{24.12} \]

We can use Bayes’ Theorem for classification purposes, changing some of the notation, let:

- \(P(y = k)\) = \(\pi_k\), the prior probability for class \(k\).

- \(P(X = \mathbf{x} \mid y = k)\) = \(f_k(\mathbf{x})\), the class-conditional density for inputs \(X\) in class \(k\).

Thus, the posterior probability (the conditional probability of the response given the inputs) is:

\[ P(y = k \mid X = \mathbf{x}) = \frac{f_k(\mathbf{x}) \hspace{1mm} \pi_k}{\sum_{k=1}^{K} f_k(\mathbf{x}) \pi_k} \tag{24.13} \]

By using Bayes’ theorem we are essentially modeling the posterior probability \(P(y=k \mid X=\mathbf{x})\) in terms of likelihood densities \(f_k(\mathbf{x})\) and prior probabilities \(\pi_k\).

\[ \text{posterior} = \frac{\text{likelihood} \times \text{prior}}{\text{evidence}} \tag{24.14} \]

24.2 Bayes Classifier

As you know, in Supervised Learning our goal is to find a model \(\hat{f}()\) that makes good predictions. In a classification setting this translates into minimizing the probability of assigning an individual \(\mathbf{x_i}\) to the wrong class. Under this mindset, it seems a good idea to classify an object \(\mathbf{x_i}\) to the class \(k\) that makes \(P(y=k \mid X=\mathbf{x_i})\) as large as possible. That is, classify \(\mathbf{x_i}\) to the most likely class, given its predictors.

An error occurs when an input vector of class \(\mathcal{C}_1\) is misclassified as belonging to class \(\mathcal{C}_2\), or vice versa. Mathematically, a classification error involves having an input \(\mathbf{x}\) in decision region \(\mathcal{R}_1\) being labeled as \(\mathcal{C}_2\): \((\mathbf{x} \in \mathcal{R}_1, \mathcal{C}_2)\), and vice versa \((\mathbf{x} \in \mathcal{R}_2, \mathcal{C}_1)\). The probability of this classification error is given by:

\[ P(error) = P(\mathbf{x} \in \mathcal{R}_1, \mathcal{C}_2) + P(\mathbf{x} \in \mathcal{R}_2, \mathcal{C}_1) \tag{24.15} \]

In order to minimize \(P(error)\) we should find a way to assign \(\mathbf{x_i}\) to whichever class renders this probability to a minimum. Thus, if \(P(\mathbf{x}, \mathcal{C}_1) > P(\mathbf{x}, \mathcal{C}_2)\) for a certain input vector \(\mathbf{x}\), then we label \(\mathbf{x}\) as \(\mathcal{C}_1\).

Alternatively, minimizing \(P(error)\) is equivalent to maximizing \(P(correct)\)

\[ P(correct) = P(\mathbf{x} \in \mathcal{R}_1, \mathcal{C}_1) + P(\mathbf{x} \in \mathcal{R}_2, \mathcal{C}_2) \tag{24.16} \]

In general, for \(K\) classes the probability of correct classification is:

\[ P(correct) = \sum_{k=1}^{K} P(\mathbf{x} \in \mathcal{R}_k, \mathcal{C}_k) \tag{24.17} \]

which is maximized by choosing decision regions \(\mathcal{R}_k\) such that each \(\mathbf{x}\) is assigned to the class for which the joint distribution \(P(\mathbf{x}, \mathcal{C}_k)\) is largest. Using the product rule, we get that this joint probability is:

\[ P(\mathbf{x}, \mathcal{C}_k) = P(\mathcal{C}_k \mid \mathbf{x}) P(\mathbf{x}) \tag{24.18} \]

We should assign \(\mathbf{x}\) to the most likely class, given its input features \(P(\mathcal{C}_k \mid \mathbf{x})\). This is the so-called Bayes Classifier.

Note that the Bayes formula

\[ P(y = k | X = \mathbf{x}) = \frac{ \pi_k f_k(\mathbf{x})}{\sum_{k=1}^{K} \pi_k f_k(\mathbf{x})} \tag{24.19} \]

does NOT tell us:

- how to calculate priors \(\pi_k\)

- what form should we use for densities \(f_k(\mathbf{x})\)

which means there is plenty of room to play with \(\pi_k\) and \(f_k(\mathbf{x})\)

So it’s up to us to decide how to estimate priors \(\pi_k\), as well as what class-conditional densities \(f_k(\mathbf{x})\) to use:

- Normal distribution(s)?

- Mixture of Normal distributions?

- Non-parametric estimates (e.g. kernel densities)?

- Assume predictors are independet (Naive Bayes)?

Keep in mind that a Bayes Classifier theoretically works as long as the terms in \(P(y=k \mid X=\mathbf{x})\) are all correctly specified. Easier said than done in practice.

Interestingly, we can also try to directly specify the posterior \(P(y = k \mid X = \mathbf{x})\) with a semi-parametric approach, for instance:

\[ P(y = k | X = \mathbf{x}) = \frac{e^{\beta_0 + \beta_1 X_1 + \dots + \beta_p X_p}}{1 + e^{\beta_0 + \beta_1 X_1 + \dots + \beta_p X_p}} \tag{24.20} \]